Data for AI

Harness the power of data with Relu Consultancy's web crawling solutions. Grow your business with the right data at the right time.

Our Partners and Customers


Why Is Relevant Data Key to Enterprise Success?

Data-driven decisions are essential for businesses to thrive today. Training AI models, particularly for natural language processing (NLP) and large language model (LLM) applications, requires large datasets. With Relu, get the relevant data you need to train your AI models effectively.

Requirement Discovery

Get on a discovery call with us to share your specific requirements.

Crawler Configuration

Our team will configure crawlers to deliver efficient data extraction.

Structured Extraction

We extract the data and structure it according to a defined format.

Data Delivery & Integration

We deliver the structured data into your system and provide ongoing support to ensure smooth integration and a continuous data flow.

Post-Delivery Support

After delivery, we remain available to help you with any issues or updates.


Our Enterprise Web Crawling Solutions

Scalability

We offer scalable storage for large volumes of data without capacity constraints, and you can scale up as and when required.

Anti-bot measure

We use advanced technologies to bypass anti-bot measures for seamless data access, ensuring uninterrupted data scraping even from highly secured and protected websites.

Precise data

We are committed to providing only accurate and reliable data. Every dataset goes through a rigorous validation process before delivery, so you receive precise data.

Customized data

We provide data tailored solely to your business requirements. We understand that every business has unique needs, and we align our work with your specific goals.

Data expert support

Get full access to guidance from our data experts. Our team of professionals is available 24/7 to assist you with any queries or challenges.

Read More

Data expert support

Get complete access to our data expert guidance. Our team of professionals is 24*7 available to assist you with any queries or challenges.

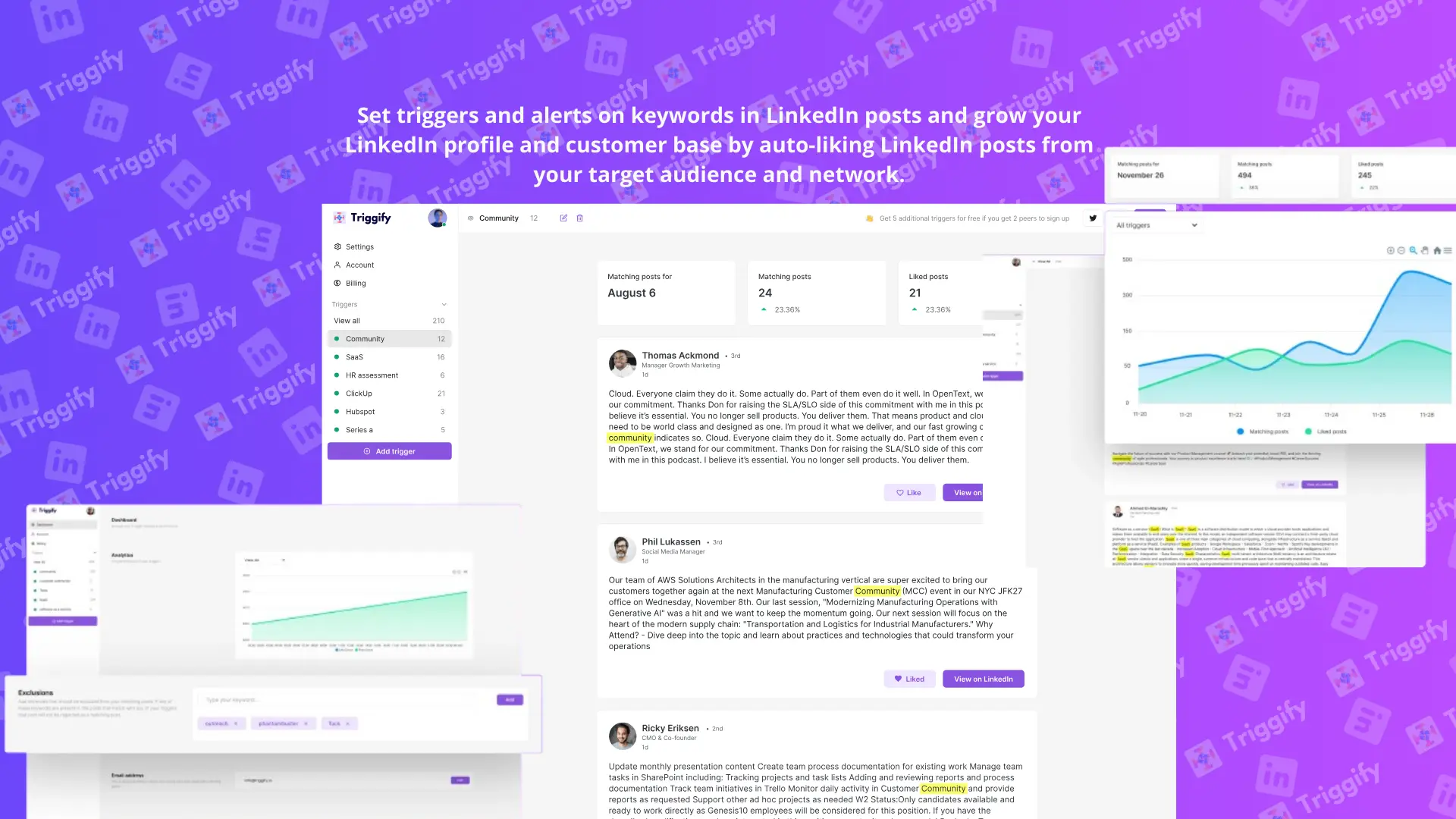

Benefits of Automating with No-Code Tools

If you are unsure about the applications and benefits of no-code automation services, read on to learn how automating tasks with no-code tools helps businesses of all sizes.

Boost productivity

Free your team from repetitive tasks, allowing them to focus on higher-value activities.

Reduce costs

Eliminate manual labor and streamline processes, leading to significant cost savings.

Increased accuracy

Automate tasks prone to human error, ensuring consistent and accurate results.

Scalability

Easily adapt your automated workflows to changing business needs and grow your operations efficiently.

Improved customer experience

Automate repetitive tasks related to customer service and support, enhancing customer satisfaction.

Frequently Asked Questions

Find answers to common questions about our services and policies in our FAQ section.

For further help, contact our support team

What kind of data can you crawl?

How do you ensure data accuracy and quality?

Can you handle websites with complex structures or login requirements?

Can you integrate your web crawling solution with our existing data warehouse or cloud storage platform?

How can I monitor the progress of my web crawl and track the amount of data collected?
